We know a lot about fake news. It’s an old problem. Academics have been studying it - and how to combat it - for decades. In 1925, Harper’s Magazine published "Fake News and the Public," calling its spread via new communication technologies "a source of unprecedented danger." That danger has only increased. Some of the most shared "news stories" from the 2016 U.S. election - such as Hillary Clinton selling weapons to Islamic State or the pope endorsing Donald Trump for president - were simply made up.

Computational Social Science

The use of socio-technical data to predict elections is a growing research area. We argue that election prediction research suffers from under-specified theoretical models that do not properly distinguish between ‘poll-like’ and ‘prediction market-like’ mechanisms when interpreting findings. More specifically, we argue that, in systems with strong norms and reputational feedback mechanisms, individuals have market-like incentives to bias content creation toward candidates they expect will win. We provide evidence for the merits of this approach using the creation of Wikipedia pages for candidates in the 2010 US and UK national legislative elections. We find that Wikipedia editors are more likely to create Wikipedia pages for challengers who have a better chance of defeating their incumbent opponent and that the timing of these page creations coincides with periods when collective expectations for the candidate’s success are relatively high.

The increasing abundance of digital textual archives provides an opportunity for understanding human social systems. Yet the literature has not adequately considered the disparate social processes by which texts are produced. Drawing on communication theory, we identify three common processes by which documents might be detectably similar in their textual features - authors sharing subject matter, sharing goals, and sharing sources. We hypothesize that these processes produce distinct, detectable relationships between authors in different kinds of textual overlap. We develop a novel n-gram extraction technique to capture such signatures based on n-grams of different lengths. We test the hypothesis on a corpus where the author attributes are observable: the public statements of the members of the U.S. Congress. This article presents the first empirical finding that shows different social relationships are detectable through the structure of overlapping textual features. Our study has important implications for designing text modelling techniques to make sense of social phenomena from aggregate digital traces.
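As a rough illustration of textual overlap measured at several n-gram lengths, the sketch below computes a Jaccard overlap between two texts for each length. It is not the extraction technique from the article: the whitespace tokenizer, the Jaccard measure, and the names `ngrams` and `overlap_profile` are all assumptions made for this example.

```python
def ngrams(tokens, n):
    """Return every contiguous run of n tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def overlap_profile(text_a, text_b, lengths=(1, 2, 3, 4)):
    """Jaccard overlap of the two texts' n-gram sets, one value per length.

    Assumption: lowercased whitespace tokens stand in for real tokenization.
    """
    tokens_a = text_a.lower().split()
    tokens_b = text_b.lower().split()
    profile = {}
    for n in lengths:
        set_a = set(ngrams(tokens_a, n))
        set_b = set(ngrams(tokens_b, n))
        union = set_a | set_b
        # Two texts shorter than n tokens share nothing at this length.
        profile[n] = len(set_a & set_b) / len(union) if union else 0.0
    return profile


if __name__ == "__main__":
    print(overlap_profile("a b c d", "a b c e", lengths=(1, 2)))
```

One way to read such a profile: overlap that persists at longer lengths suggests copied wording, while overlap confined to single words is more consistent with shared subject matter. That reading is a heuristic for this sketch, not a result from the article.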

"A large body of literature claims that oil production increases the risk of civil war. However, a growing number of skeptics argue that the oil-conflict link is not causal, but merely an artifact of flawed research designs. This article reevaluates whether - and where - oil causes conflict by employing a novel identification strategy based on the geological determinants of hydrocarbon reserves. We employ geospatial data on the location of sedimentary basins as a new spatially disaggregated instrument for petroleum production. Combined with newly collected data on oil field locations, this approach allows investigating the causal effect of oil on conflict at the national and sub-national levels. Contrary to the recent criticism, we find that previous work has underestimated the magnitude of the conflict-inducing effect of oil production. Our results indicate that oil has a large and robust effect on the likelihood of secessionist conflict, especially if it is produced in populated areas. In contrast, oil production does not appear to be linked to center-seeking civil wars. Moreover, we find considerable evidence in favor of an ethno-regional explanation of this link. Oil production significantly increases the risk of armed secessionism if it occurs in the settlement areas of ethnic minorities."

The rise of fake news highlights the erosion of long-standing institutional bulwarks against misinformation in the internet age. Concern over the problem is global. However, much remains unknown regarding the vulnerabilities of individuals, institutions, and society to manipulations by malicious actors. A new system of safeguards is needed. Below, we discuss extant social and computer science research regarding belief in fake news and the mechanisms by which it spreads. Fake news has a long history, but we focus on unanswered scientific questions raised by the proliferation of its most recent, politically oriented incarnation. Beyond selected references in the text, suggested further reading can be found in the supplementary materials.

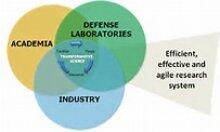

Many companies have proprietary resources and/or data that are indispensable for research, and academics provide the creative fuel for much early-stage research that leads to industrial innovation. It is essential to the health of the research enterprise that collaborations between industrial and university researchers flourish. This system of collaboration is under strain. Financial motivations driving product development have led to concerns that industry-sponsored research comes at the expense of transparency (1). Yet many industry researchers distrust quality control in academia (2) and question whether academics value reproducibility as much as rapid publication. Cultural differences between industry and academia can create or increase difficulties in reproducing research findings. We discuss key aspects of this problem that industry-academia collaborations must address and for which other stakeholders, from funding agencies to journals, can provide leadership and support.

Volunteer Science is an online platform enabling anyone to participate in social science research. The goal of Volunteer Science is to build a thriving community of research participants and social science researchers for Massively Open Online Social Experiments ("MOOSEs"). The architecture of Volunteer Science has been built to be open to researchers, transparent to participants, and to facilitate the levels of concurrency needed for large-scale social experiments. Since then, 14 experiments and 12 survey-based interventions have been developed and deployed, with subjects largely being recruited through paid advertising, word of mouth, social media, research, and Mechanical Turk. We are currently replicating several forms of social research to validate the platform, working with new collaborators, and developing new experiments. Moving forward, our priorities are continuing to grow our user base, developing quality control processes and collaborators, diversifying our funding models, and creating novel research.

Manual annotations are a prerequisite for many applications of machine learning. However, weaknesses in the annotation process itself are easy to overlook. In particular, scholars often choose what information to give to annotators without examining these decisions empirically. For subjective tasks such as sentiment analysis, sarcasm, and stance detection, such choices can impact results. Here, for the task of political stance detection on Twitter, we show that providing too little context can result in noisy and uncertain annotations, whereas providing too strong a context may cause it to outweigh other signals. To characterize and reduce these biases, we develop ConStance, a general model for reasoning about annotations across information conditions. Given conflicting labels produced by multiple annotators seeing the same instances with different contexts, ConStance simultaneously estimates gold standard labels and also learns a classifier for new instances. We show that the classifier learned by ConStance outperforms a variety of baselines at predicting political stance, while the model’s interpretable parameters shed light on the effects of each context.
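To make the setup concrete, here is a minimal sketch of a naive baseline for annotations collected under different context conditions: a majority vote per instance, plus each context's rate of agreement with that vote. This is not ConStance, which jointly estimates labels and learns a classifier; the function names, the `(instance, context, label)` tuple layout, and the toy records are all hypothetical.

```python
from collections import Counter, defaultdict


def majority_labels(annotations):
    """Majority label per instance, pooling votes across all contexts.

    Ties go to the label seen first, which is an arbitrary choice.
    """
    votes = defaultdict(Counter)
    for instance, _context, label in annotations:
        votes[instance][label] += 1
    return {inst: counts.most_common(1)[0][0] for inst, counts in votes.items()}


def agreement_by_context(annotations):
    """Share of labels in each context that match the pooled majority."""
    consensus = majority_labels(annotations)
    hits, totals = Counter(), Counter()
    for instance, context, label in annotations:
        totals[context] += 1
        hits[context] += int(label == consensus[instance])
    return {context: hits[context] / totals[context] for context in totals}


if __name__ == "__main__":
    # Hypothetical toy records: (instance id, context shown, label given).
    toy = [
        ("t1", "none", "pro"), ("t1", "profile", "pro"), ("t1", "party", "anti"),
        ("t2", "none", "anti"), ("t2", "profile", "anti"), ("t2", "party", "anti"),
    ]
    print(majority_labels(toy))
    print(agreement_by_context(toy))
```

A context whose labels often depart from the pooled vote may be pulling annotators away from the other signals, but a majority vote cannot tell a biased context from an informative one. That limitation is the kind of problem a joint model is meant to address.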

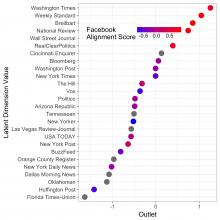

The present work proposes the use of social media as a tool for better understanding the relationship between a journalist’s social network and the content they produce. Specifically, we ask: what is the relationship between the ideological leaning of a journalist’s social network on Twitter and the news content he or she produces? Using a novel dataset linking over 500,000 news articles produced by 1,000 journalists at 25 different news outlets, we show a modest correlation between the ideologies of those a journalist follows on Twitter and the content he or she produces. This research can provide the basis for greater self-reflection among media members about how they source their stories and how their own practice may be colored by their online networks. For researchers, the findings furnish a novel and important step in better understanding the construction of media stories and the mechanics of how ideology can play a role in shaping public information.

Understanding the factors of network formation is a fundamental aspect in the study of social dynamics. Online activity provides us with an abundance of data that allows us to reconstruct and study social networks. Statistical inference methods are often used to study network formation. Ideally, statistical inference allows the researcher to study the significance of specific factors to the network formation. One popular framework is known as Exponential Random Graph Models (ERGM), which provide a principled and statistically sound interpretation of an observed network structure. Networks, however, are not always given or set in stone. Oftentimes, the network is "reconstructed" by applying some thresholds on the observed data/signals. We show that subtle changes in the thresholding have significant effects on the ERGM results, casting doubts on the interpretability of the model. In this work we present a case study in which different thresholding techniques yield radically different results that lead to contrasting interpretations. Consequently, we revisit the applicability of ERGM to threshold networks.
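The sensitivity to thresholding can be seen even without fitting a model. The sketch below rebuilds an undirected network from weighted pairwise signals at two thresholds and compares edge and triangle counts, which are common ERGM terms. The weights, the thresholds, and the function names are hypothetical, and no ERGM is fitted here.

```python
from itertools import combinations


def threshold_network(weights, tau):
    """Keep an undirected edge when its observed weight is at least tau."""
    return {frozenset(pair) for pair, weight in weights.items() if weight >= tau}


def summary(edges, nodes):
    """Edge count, density, and triangle count of the thresholded network."""
    n = len(nodes)
    density = len(edges) / (n * (n - 1) / 2)
    triangles = sum(
        1
        for a, b, c in combinations(sorted(nodes), 3)
        if {frozenset((a, b)), frozenset((b, c)), frozenset((a, c))} <= edges
    )
    return {"edges": len(edges), "density": density, "triangles": triangles}


if __name__ == "__main__":
    # Hypothetical interaction counts between four accounts.
    weights = {("a", "b"): 5, ("b", "c"): 3, ("a", "c"): 2, ("c", "d"): 1}
    nodes = {"a", "b", "c", "d"}
    for tau in (1, 3):
        print(tau, summary(threshold_network(weights, tau), nodes))
```

In this toy case, raising the threshold from 1 to 3 removes the only triangle, so any clustering term estimated on the two networks would rest on different evidence.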

Over the past 12 years, nearly 20 U.S. states have adopted voter photo identification laws, which require voters to show a picture ID to vote. These laws have been challenged in numerous lawsuits, resulting in a variety of court decisions and, in several instances, revised legislation. Supporters argue that photo ID rules are necessary to safeguard the sanctity and legitimacy of the voting process by preventing people from impersonating other voters. They say that essentially every U.S. citizen possesses an acceptable photo ID, or can relatively easily get one. Opponents argue that that’s not true; that laws requiring voters to show photo ID disenfranchise registered voters who don’t have the accepted forms of photo ID and can’t easily get one. Further, they say, these laws confuse some registered voters, who therefore don’t bother to vote at all. Opponents also point out that there are almost no documented cases of voter impersonation fraud. Supporters counter that without a photo ID requirement, we have no idea how much fraud there might be.

"Man is by nature a political animal," as asserted by Aristotle. This political nature manifests itself in the data we produce and the traces we leave online. In this tutorial, we address a number of fundamental issues regarding mining of political data: What types of data would be considered political? What can we learn from such data? Can we use the data for prediction of political changes, etc.? How can these prediction tasks be done efficiently? Can we use online socio-political data in order to get a better understanding of our political systems and of recent political changes? What are the pitfalls and inherent shortcomings of using online data for political analysis? In recent years, with the abundance of data, these questions, among others, have gained importance, especially in light of the global political turmoil and the upcoming 2016 US presidential election. We introduce relevant political science theory, describe the challenges within the framework of computational social science, and present state-of-the-art approaches bridging social network analysis, graph mining, and natural language processing.